Rating foundations

The Foundation Practice Rating project is using data to improve diversity, accountability, and transparency in charitable organisations

Charitable foundations are often not very diverse, accountable, or transparent. This is because most of them already have their own funds and are neither required nor incentivised to disclose much information or to listen to their grantees. However, without that sort of feedback, it can be hard for foundations to learn and improve.

The Foundation Practice Rating (FPR) is working to improve foundation practice in three important areas: diversity, accountability, and transparency. To this end, it produces and publishes an annual rating of 100 UK grant-making foundations.

The research and rating are done without the permission or involvement of the assessed foundations. This is unprecedented, because foundations typically control almost all the communications about their own organisations.

FPR’s originator, Danielle Walker-Palmour, director of the UK’s Friends Provident Foundation, describes this control as “a function of our relative power”.

FPR upends this power structure: the foundations profiled do not choose to be assessed, cannot opt out, and always have their ratings published in full.

This makes FPR quite different to other ratings, such as the Grantee Perception Report, which is commissioned by the foundations themselves, and more like a newspaper ranking of business schools.

FPR is funded by a group of UK foundations (which are themselves among the foundations assessed) and has now run three times, publishing results in 2022, 2023 and 2024. The criteria are public and make a great checklist for any foundation assessing its own practices.

The research for FPR is done by Giving Evidence, an independent consultancy and research organisation led by Caroline Fiennes. Each year, it selects 100 UK grant-making foundations to assess. This year, the 100 foundations assessed had collective net assets of £61.6bn, and annual giving of £2bn.

The cohort is drawn afresh each year and comprises three parts:

First, the foundations (approximately 12) that fund FPR. This is to avoid them being accused of subjecting other foundations to scrutiny which they shun themselves.

Second, the five UK foundations with the largest giving budgets. This is because they dominate foundation giving in the UK.

Third, a random selection taken from the UK’s community foundations and the largest 300 foundations. Giving Evidence organises the cohort such that a fifth of the foundations assessed are in the top quintile by size, a fifth are in the second quintile by size, etc. This is to ensure FPR gives a representative view of the foundation sector.
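For anyone considering replicating FPR elsewhere, the size-stratified draw described above might be sketched in Python as follows. This is a minimal, hypothetical illustration, not Giving Evidence's actual procedure: the names `Foundation`, `assign_quintiles` and `draw_cohort`, the use of a single `size` figure to rank foundations, the assumption that every fixed member (funders, the five largest givers) appears in the sampling frame, and the rule that fixed members count towards their own quintile's quota are all assumptions.

```python
import random
from dataclasses import dataclass

# Hypothetical sketch only: not FPR's or Giving Evidence's actual code.


@dataclass(frozen=True)
class Foundation:
    name: str
    size: float  # assumed single size measure, e.g. annual giving


def assign_quintiles(frame):
    """Map each foundation's name to a size quintile (0 = largest fifth)."""
    ranked = sorted(frame, key=lambda f: f.size, reverse=True)
    n = len(ranked)
    return {f.name: (i * 5) // n for i, f in enumerate(ranked)}


def draw_cohort(frame, must_include, cohort_size=100, seed=None):
    """Draw cohort_size // 5 foundations from each size quintile.

    Assumption: foundations named in must_include (e.g. funders and the
    largest givers) are always kept and count towards their own quintile's
    quota; the remaining slots in that quintile are filled at random.
    """
    names = {f.name for f in frame}
    missing = set(must_include) - names
    if missing:
        raise ValueError(f"must_include names not in frame: {sorted(missing)}")

    rng = random.Random(seed)
    quintile = assign_quintiles(frame)
    per_quintile = cohort_size // 5
    cohort = []
    for q in range(5):
        in_q = [f for f in frame if quintile[f.name] == q]
        fixed = [f for f in in_q if f.name in must_include]
        pool = [f for f in in_q if f.name not in must_include]
        needed = per_quintile - len(fixed)
        if needed < 0 or needed > len(pool):
            raise ValueError(f"cannot fill quintile {q} with {per_quintile} foundations")
        cohort.extend(fixed)
        cohort.extend(rng.sample(pool, needed))  # random fill, no duplicates
    return cohort


# Toy usage with made-up foundations F0 (largest) to F299 (smallest).
frame = [Foundation(f"F{i}", size=float(1000 - i)) for i in range(300)]
cohort = draw_cohort(frame, must_include={"F0", "F1", "F2", "F3", "F4"}, seed=1)
print(len(cohort))  # 100
```

In this toy example each quintile contributes 20 foundations, so the top quintile receives 15 random picks alongside the five fixed members.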

Foundations can also opt in, although they are not included in the main cohort of 100 to avoid bias. This year, three foundations asked to be assessed.

The first principle underlying the research is that it takes the stance of someone seeking a job or grant from that foundation. Therefore, it only uses material which is publicly available to that applicant. The researchers look at material or documents on the foundation’s website, and at annual reports and accounts filed with the charity regulator. The research does not involve any surveys.

Each foundation is examined by two researchers independently, followed by a process to resolve any discrepancies. Each researched foundation is then sent the data about it, to enable it to point out any evidence that we have missed.

The second principle is that the research is as neutral as possible. The set of foundations from which FPR draws its random sample is not constructed by the FPR team but taken from an existing list. Likewise, most of the criteria and thresholds in FPR are drawn from existing sources and rating systems, such as the Racial Equality Index.

Moreover, FPR runs a public consultation every year to gather ideas for changes to its scope, or for criteria to add, remove or change. This gives confidence that FPR truly assesses factors which charities consider important.

FPR has 56 criteria that contribute to the scores. The team also collects data on 42 other questions which do not affect scores but are used in the process, for example the size of the foundation’s team and its website address.

“The criteria vary,” Caroline Fiennes explains. “Some are basic, like having a website, and publishing details of funding areas. Some are more advanced, such as having targets for improving diversity, or soliciting and acting on feedback from grantees.”

Foundations are exempt from criteria not relevant to them. For example, a foundation with no or few staff is exempt from publishing data about its gender pay gap.

Each foundation is given a rating of A, B, C or D (with A being the highest and D being the lowest) on each of the three domains (diversity, accountability, and transparency), plus an overall rating.

All four ratings for each of the 100 foundations are published, along with various analyses. FPR also publishes examples of good practice and advice on doing well.

“FPR takes a positive approach, intending to be a stimulus and resource for improvement,” Caroline says, adding: “FPR is a rating rather than a ranking. This is deliberate because a ranking is zero-sum: if somebody rises, somebody else must fall – whereas FPR is very happy if everybody rises, which is indeed the goal.”

Foundations have welcomed FPR, “which is a surprise”, says Fiennes, since not everyone welcomes unsolicited scrutiny or appraisal. Many foundations have said that FPR has enabled them finally to make changes they had long thought necessary, has moved some issues up their priority list, or has highlighted issues they had previously overlooked.

Some foundations use the FPR criteria as a checklist for self-assessment. Fiennes described this as “fabulous” because she had not envisaged the project “having that effect”.

Every criterion has been met by at least one foundation. This shows that FPR is not asking for anything impossible.

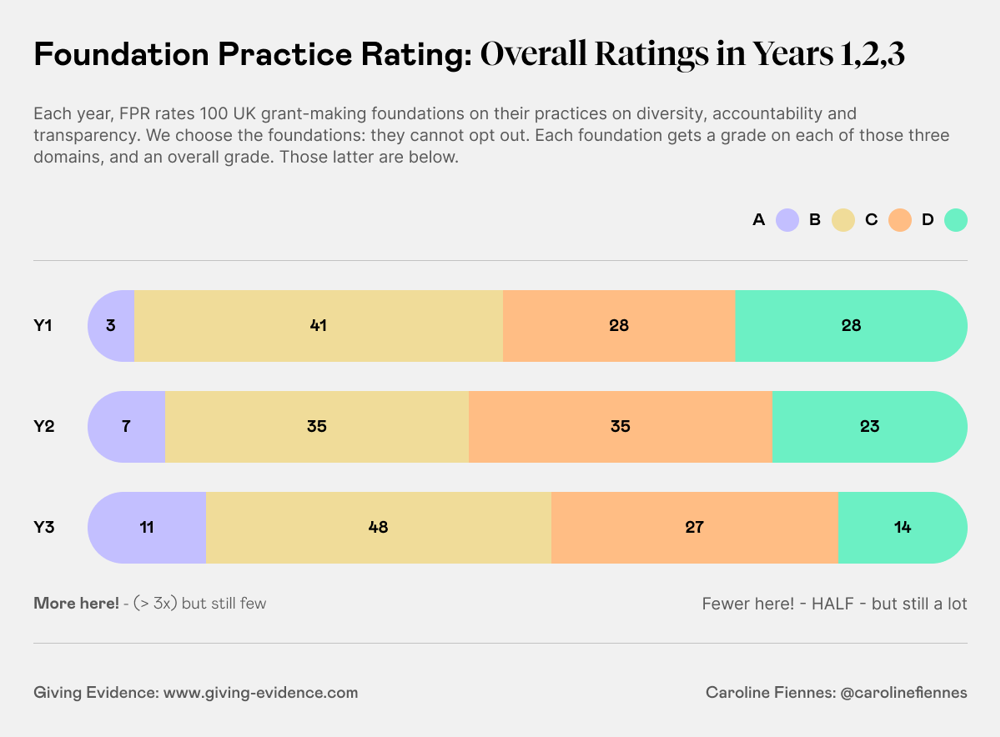

So far, foundation practices are improving. The graph shows the overall ratings for FPR’s first three years: the number of foundations scoring A overall has more than tripled from three to 11; the number scoring D overall has halved, from 28 to 14. That is excellent news – even if FPR may only be one of many contributory factors.

There are other improvements too: fewer foundations scored D in all three domains this year than in previous years (nine this year, compared with 23 last year). The number of foundations scoring no points at all on diversity has also fallen, from 16 in Year One to 11 in Year Three.

And the number of assessed foundations with no website has fallen, from 22 last year to 13 this year. Remarkably, one foundation without a website is attached to Goldman Sachs, the giant investment bank; it was included by random selection in Year One and again in Year Three.

Many of the improvements are statistically significant and are not simply due to changes in the cohort from year to year.

In each of the three years, the foundations scoring A overall are a diverse set. They include Wellcome, one of Europe’s largest foundations; a much smaller endowed foundation; and a community foundation. This shows that good practices do not require a particular size or structure.

Financial size (assets or giving budget) does not correlate with scores. For example, three of the UK’s five largest foundations scored only C overall this year. However, the number of staff in a foundation does correlate with ratings.

In all three years, foundations scoring D overall have disproportionately been those with no paid staff, whilst no foundation with more than 50 staff members has scored a D. Much the same is true of trustees: foundations with more trustees perform better.

This year, overall Ds were confined to foundations with fewer than 10 trustees, and particularly common amongst foundations with five or fewer trustees. (Remember that foundations with few staff or few trustees are exempt from some criteria.) It’s not immediately clear why these patterns arise, but it may be that good practices in diversity, accountability and transparency require work, which is impossible with too few personnel.

Most foundations can choose how many staff they have – whereas they cannot choose their financial size – and it looks as though having too few people is a false economy, which impairs practice on factors which nonprofits say matter.

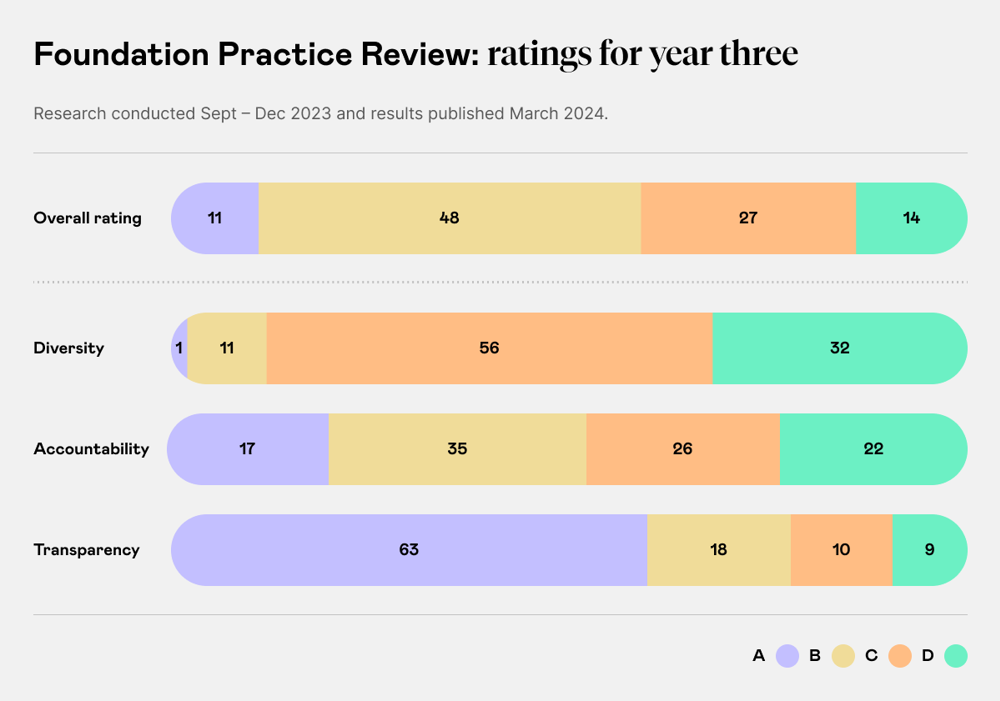

Diversity, which encompasses accessibility (e.g. whether material on the foundation’s website can be read without a mouse), is by far the weakest domain. The graph shows the breakdown of ratings for Year Three (research conducted September to December 2023; results published in March 2024).

In each of the three years, almost all of the 10 criteria on which foundations collectively scored worst have been in diversity. In Years One and Two, none of the 100 foundations assessed scored an A on diversity. But there is good news here too: this year, for the first time, a foundation achieved an A on diversity.

In fact, that foundation, the Community Foundation for Tyne & Wear and Northumberland, also achieved an A on both the other domains, giving it a clean sweep of AAA. Importantly, that community foundation never regarded FPR as a threat but as a tool for improvement, and it used the criteria for self-assessment.

Community foundations consistently out-perform the average foundation, presumably because they are designed around community accountability and have to raise their funds.

In addition to seeing the improvements in foundations’ ratings, FPR also receives direct feedback from foundations.

Responses often sound like this: “I work at Foundation X, which you recently reviewed. Thank you for the assessment – we have found it really useful, and you’ve highlighted a lot of areas where we can improve. We will be looking at our website and other channels over the coming weeks to make some positive changes in the light of your findings.”

Foundations also say that FPR has prompted them to change their practices. Cornwall Community Foundation wrote to say: “Thank you very much for including us in the Practice Rating… Your findings are very helpful for improving our practices and the information on our website.”

Caroline says she has also heard these kinds of reactions from foundations which have not been assessed. “Clearly this feedback is not definitive proof of systematic change across the board,” she says. “But it is nonetheless heartening by indicating that FPR is having its desired effect of encouraging at least some foundations to improve their practices.”

FPR will continue its annual ratings. That means any large UK foundation could be assessed in any year and receive an externally imposed incentive to improve. So far, more than half of the UK’s eligible foundations have been assessed at least once.

The consultation to inform next year’s research is open until the end of May 2024, and we will heed the responses in determining next year’s criteria and approach, though our preference is to change fairly little between years in order to avoid moving the goalposts for foundations.

Any foundation is welcome to use the criteria to assess itself and identify areas for improvement: most are relevant to any grant-maker.

FPR could potentially be replicated in any country, perhaps with some adaptations. So if you are inspired by what you read here, or think FPR could be a useful tool where you are, either as a voluntary toolkit or as an annual process, do get in touch. There is much to do!

Four ways to score well (and improve your foundation)

Source: Foundation Practice Rating

Caroline Fiennes is the founder and director of Giving Evidence, which encourages and enables giving based on sound evidence. Giving Evidence provides advice and research for donors and foundations in many countries, and runs the research operation of the Foundation Practice Rating (FPR). A Visiting Fellow at Cambridge University, Caroline is the author of the acclaimed book about philanthropy “It ain’t what you give, it’s the way that you give it”, and is a regular contributor to international media outlets writing on philanthropy and charitable giving.